Tim Ziemer

In this research project we develop systematic sound (“psychoacoustic sonification”) to communicate large amounts of data to users. Psychoacoustic sonification can complement or replace visualizations and helps us to learn more about the perceptual and cognitive dimensions of hearing.

Psychoacoustic Sonification Research

Auditory Displays present information by means of speech, information-poor sound elements, and information-rich sonification. Sonification can present complex information in an abstract but intelligible way. Psychoacoustic sonification means that known principles of human auditory perception are leveraged to maximize precision of data, orthogonality of multidimensional data, and intelligibility of complex data. Psychoacoustic Auditory Displays combine psychoacoustic sonification with additional sound elements.

Psychoacoustic Sonification

Psychoacoustics is a field that aims at translating physical sound input (acoustics) into perceptual, auditory output (psychology). The benefit of including psychoacoustic considerations in sonification design is two-fold. First, psychoacoustic sonification maximizes intelligibility.


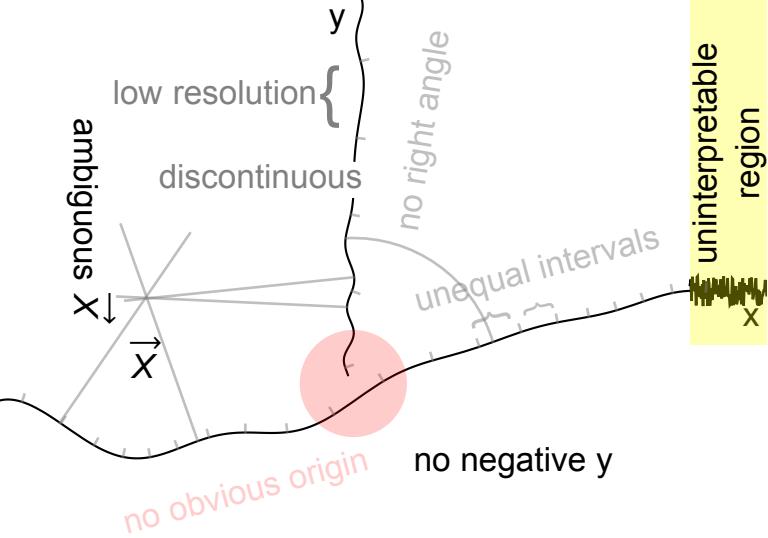

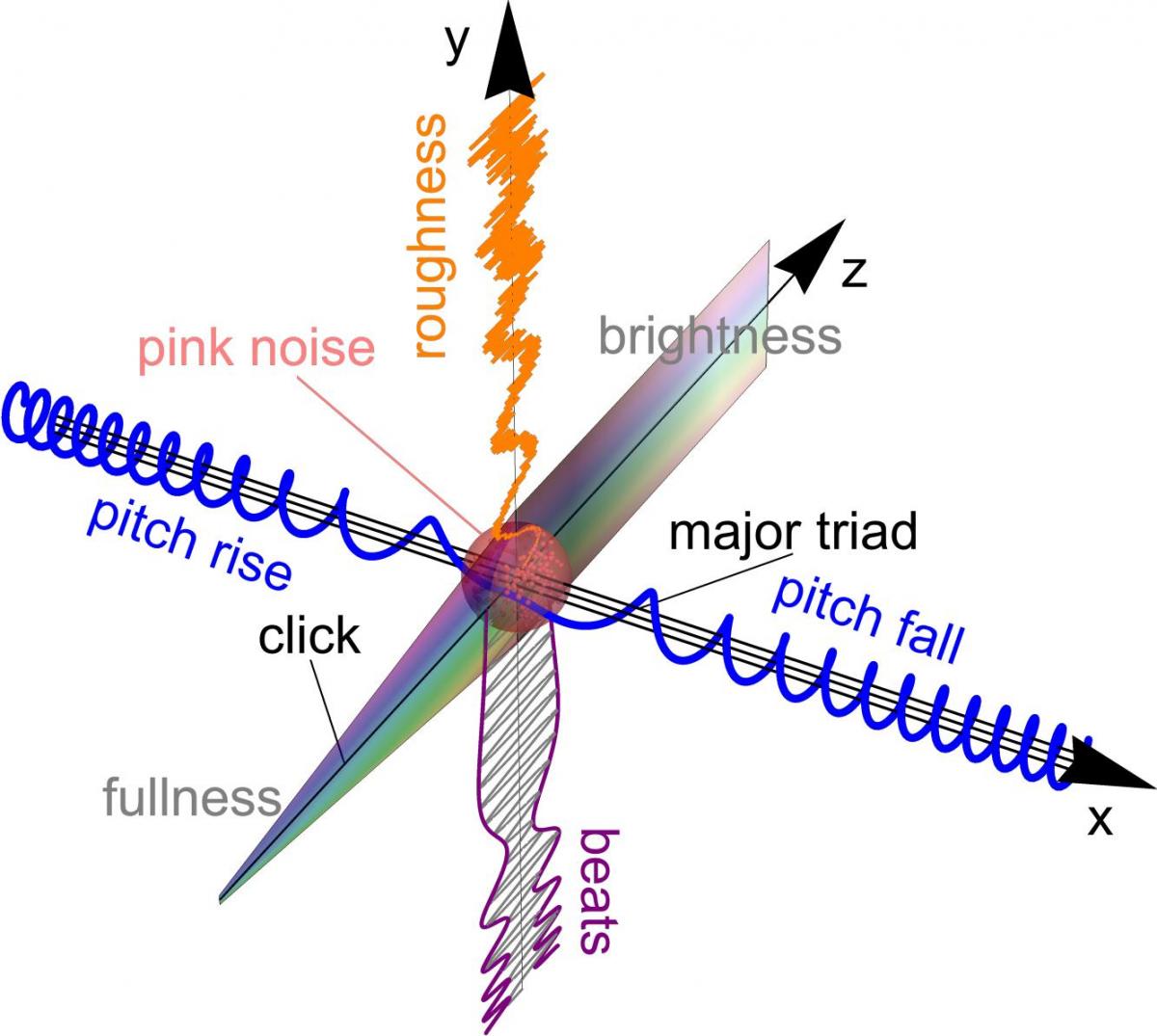

Common problems of sonification designs: The representation of data dimensions is nonlinear, not orthogonal, discontinuous, partly uninterpretable, has a low resolution, no obvious coordinate origin and only one direction per axis (positive OR negative). Consequently, the exact location of each data point may be ambiguous. From (Ziemer et al. 2020).

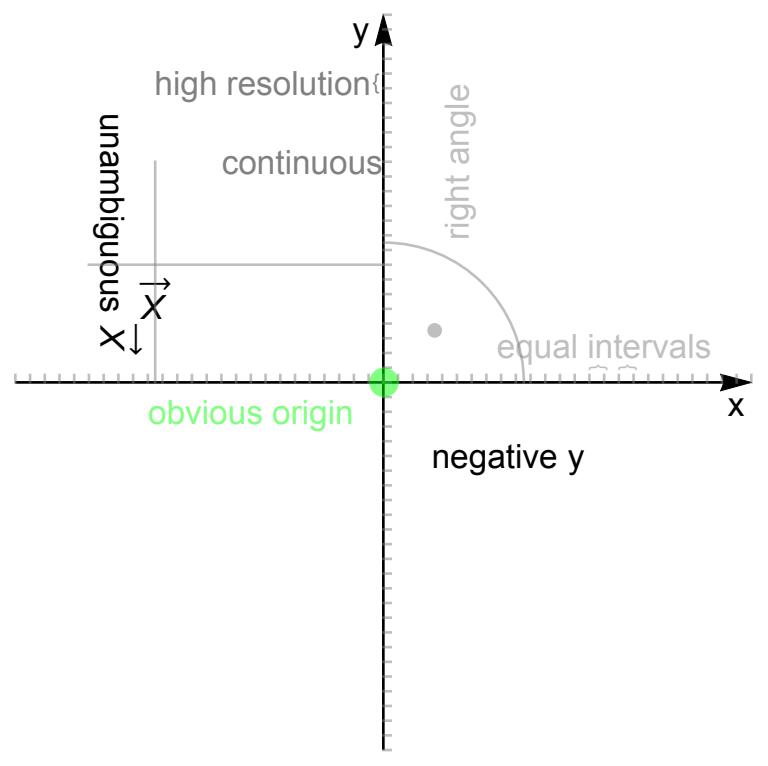

Benefit of psychoacoustic sonification: The representation of data dimensions is linear, orthogonal, continuous, has a high resolution, an obvious coordinate origin and two directions per axis (positive AND negative). Consequently, the location of each data point is unambiguous. From (Ziemer et al. 2020).

Second, as psychoacoustic sonification is capable of interactive control in real-time, it can serve as a tool for research in the field of interactive psychoacoustics and embodied cognition.

Application Areas for Psychoacoustic Sonification

Application areas for psychoacoustic sonification include

- audio guidance for drone navigation and image-guided surgery

- data monitoring, like stock markets or physiologic patient data in the intensive care unit

- human-computer and human-machine interaction in general

- audio games, trainers and simulators and augmented reality environments

- research in the field of interactive psychoacoustics with dynamic sounds, sound embodiment and auditory cognition

Data Visualizations

Data visualizations are all around. Visualizations help us to understand data and data changes, and to orientate in and navigate through (data) space. We use pictures and videos, maps, depictions and symbols, plots, charts, diagrams and text every day. We learned how to read them and intuitively interpret visualizations in every specific context. Unfortunately, this is not the case with sonification.

We see visualizations, like pie charts, plots, maps, GUIs, lists and tables every day. But have you ever heard sonifications, like auditory menus, auditory icons, earcons, auditory graphs and auditory maps?

Examples of Visual Displays

In some situations, there is too much to see. Such situations can cause “visual overload”: we may get overwhelmed, overstrained or exhausted and overlook, misinterpret or confuse information. Here, psychoacoustic sonification can reduce the cognitive demands.


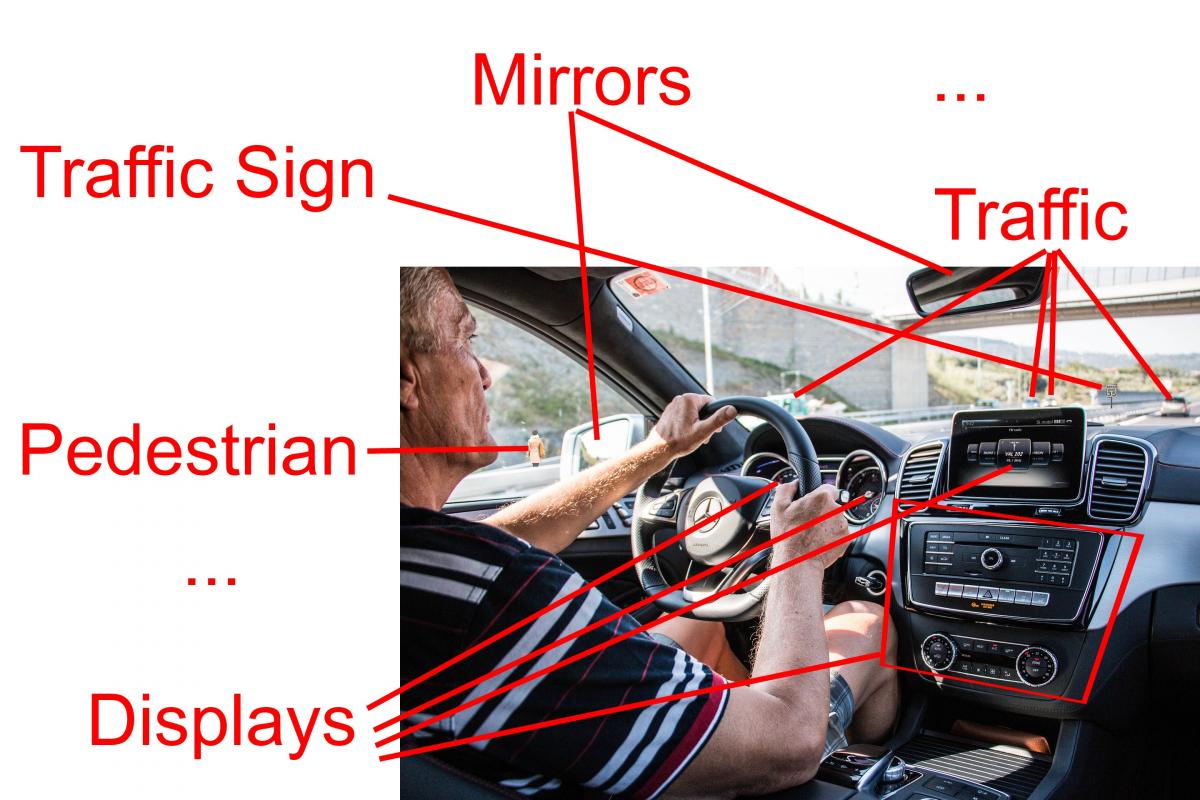

Drivers cannot concentrate on all displays, sat nav, street signs, traffic and environment at the same time. Here, psychoacoustic sonification can deliver some information. For example, it can act as an acoustic speedometer, tachometer and fuel gauge.

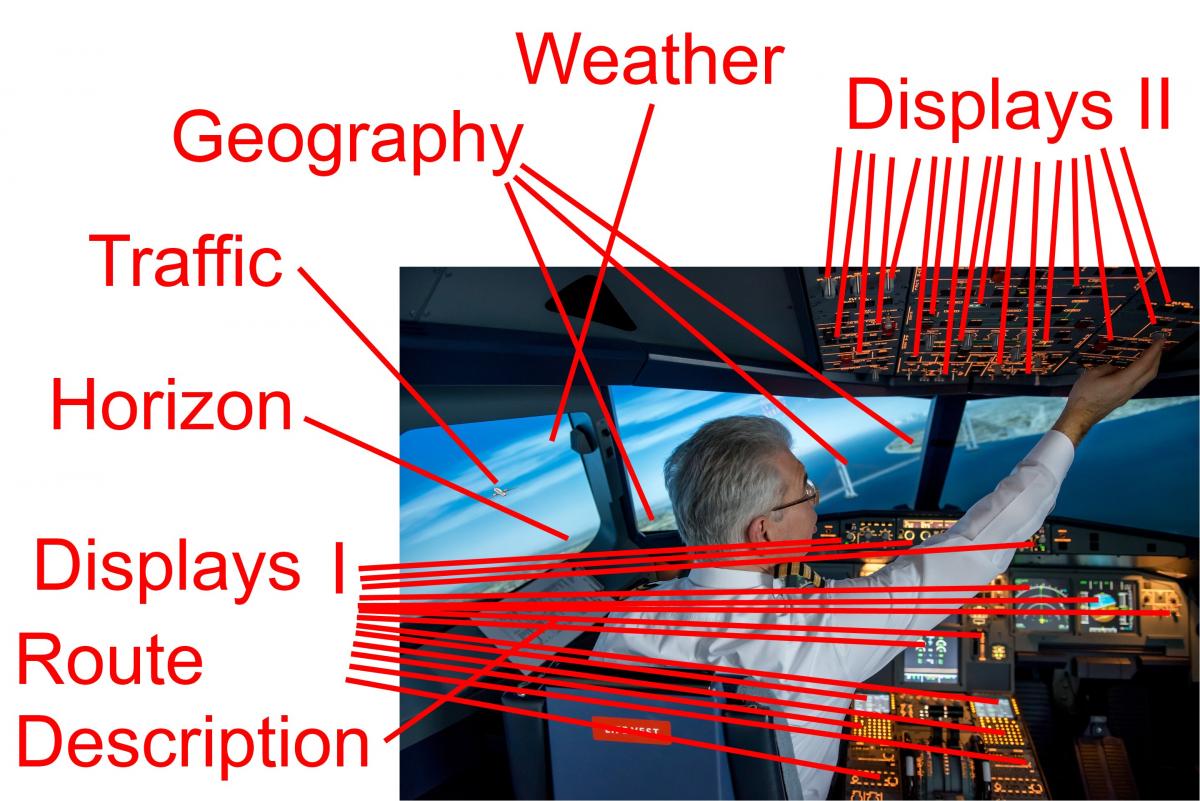

Depending on the maneuver, pilots may need to consult a small subset of the dozens of displays that are distributed over the cockpit. Here, psychoacoustic sonification can inform the pilot about pitch, roll and yaw.

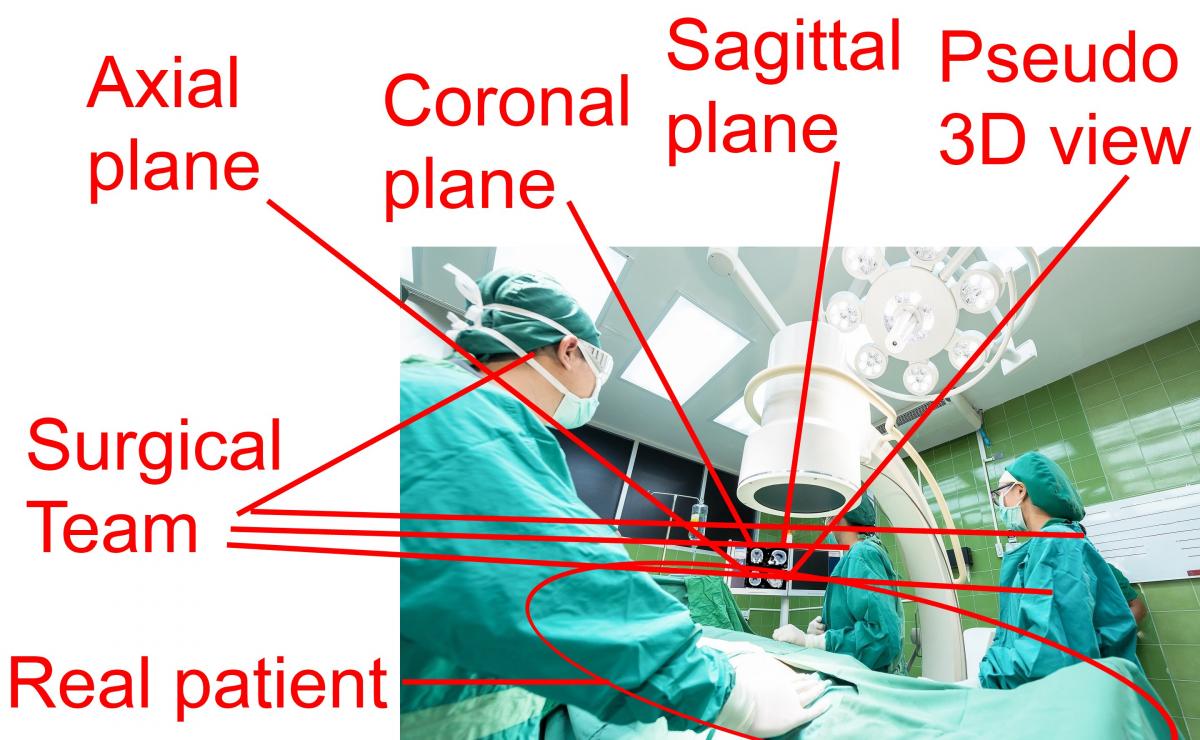

In image-guided surgery, monitors show the three anatomic planes plus an augmented pseudo 3D-model of the anatomy. None of the visualizations shows the patient from the viewpoint of the surgeon. Furthermore, looking at the screen implies an unergonomic posture and takes the visual attention off the patient. Here, psychoacoustic sonification could guide the surgeon towards the tumor, past critical structures.

In other situations, visual displays cannot be consulted, due to their impractical location, or because they would occlude important parts of the visual field or redirect the visual focus away from important visual cues.

While aligning a shelf above your head, it is almost impossible to read the bubble level display. There is no direct sight line. The display is occluded by the shelf. One solution is an auditory Spirit Level App on your smartphone: sound waves need no direct sight lines.

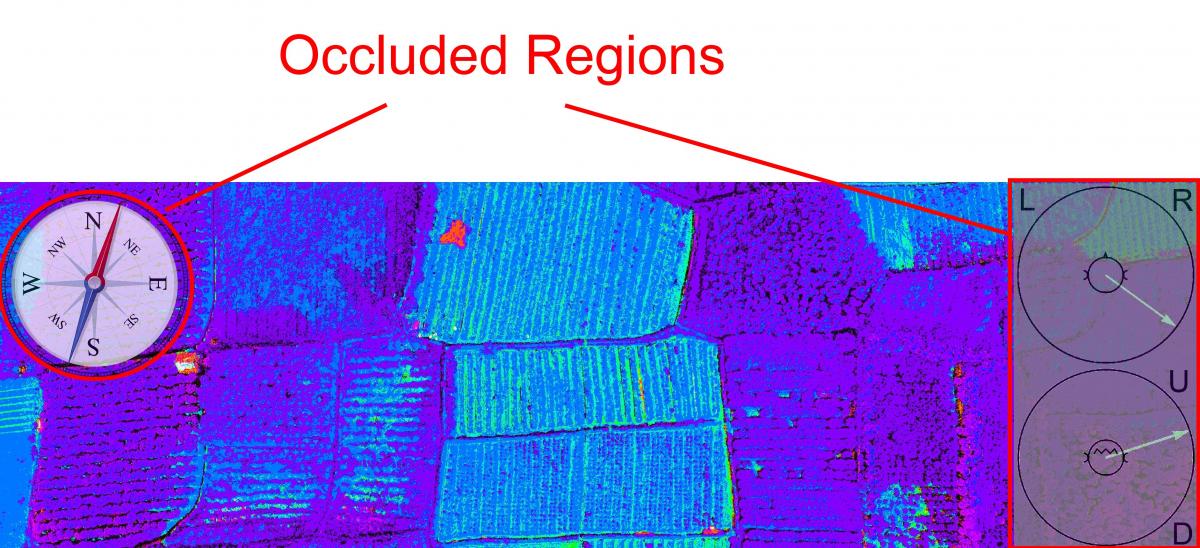

Even semi-transparent depictions can occlude important parts of the camera view in drone navigation. However, psychoacoustic sonification could deliver all necessary navigation cues. Sound neither occludes nor takes your visual attention off the camera view. From (Ziemer et al. 2020).

Examples of Psychoacoustic Sonification

Psychoacoustic sonification can communicate direct relationships, like a function f(x), or multi-dimensional relationships, like a function f(x, y, z).

Simple Psychoacoustic Sonification

Simple psychoacoustic sonification can be understood quite intuitively, like the auditory spirit level and auditory graphs.

Torpedo Level with a bubble indicator (bottom) and Spirit Level App (top): A bubble in the center indicates horizontal alignment. A bubble on the left means: tilt to the right. The further the bubble is off-center, the further you have to tilt.

Auditory Spirit Level: Steady pitch indicates horizontal alignment. A rising pitch means: tilt to the right. The faster the pitch changes, the further you have to tilt. Tiltification is a two-dimensional spirit level app that utilizes sonification.

A visual graph: Even without further explanation, axis labels and magnitudes, you get an idea about the plotted relationship and can describe what you see.

Auditory Graph: The absolute value is mapped to pitch. Positive values sound smooth, negative values sound rough. This psychoacoustic sonification gives you about as much information as the visual version.

Both examples represent one continuous dimension with a high resolution. The spirit level has a clear zero, i.e., an origin of the coordinate system at 0°. In contrast, the auditory graph is relative. Its origin can only be recognized when the signal crosses it, because the sound switches from smooth to rough.
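The two simple mappings described here can be sketched in a few lines of Python. This is only a minimal illustration, not the implementation used in Tiltification or in the published studies: the pitch range, the gain, the sign convention, the 70 Hz amplitude modulation used as a stand-in for "rough", and all function and constant names are assumptions made for this example.

```python
import math

SAMPLE_RATE = 44100            # samples per second (assumed)
F_MIN, F_MAX = 220.0, 880.0    # assumed pitch range in Hz
ROUGH_MOD_HZ = 70.0            # assumed modulation rate that makes a tone sound rough
OCTAVES_PER_SEC_PER_DEG = 0.1  # assumed gain of the spirit-level mapping


def pitch_rate(correction_deg):
    """Spirit level: pitch change in octaves per second.

    correction_deg > 0 means "tilt to the right" (assumed sign convention).
    Zero yields a steady pitch; larger corrections yield faster pitch changes.
    """
    return OCTAVES_PER_SEC_PER_DEG * correction_deg


def value_to_frequency(value, max_abs):
    """Auditory graph: map |value| in [0, max_abs] to a frequency in Hz.

    The mapping is exponential so that equal data steps give equal
    musical intervals (an assumption, not taken from the source).
    """
    share = min(abs(value) / max_abs, 1.0)
    return F_MIN * (F_MAX / F_MIN) ** share


def render_value(value, max_abs, duration=0.1):
    """Return audio samples for one data value.

    Pitch encodes the absolute value; negative values are amplitude
    modulated so that they sound rough, positive values stay smooth.
    """
    freq = value_to_frequency(value, max_abs)
    samples = []
    for i in range(round(SAMPLE_RATE * duration)):
        t = i / SAMPLE_RATE
        carrier = math.sin(2 * math.pi * freq * t)
        if value < 0:
            envelope = 0.5 * (1 + math.sin(2 * math.pi * ROUGH_MOD_HZ * t))
        else:
            envelope = 1.0
        samples.append(carrier * envelope)
    return samples
```

With these assumed constants, `value_to_frequency(5, 10)` and `value_to_frequency(-5, 10)` return the same frequency, so the sign is carried by roughness alone. This mirrors the observation that the origin of the auditory graph only becomes audible when the data cross it. The sketch renders each value separately and does not keep the phase continuous between values, which a real-time implementation would need to handle.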

Complex Psychoacoustic Sonification

Complex psychoacoustic sonification can represent large data vectors or matrices. Its benefit is the large amount of data that is presented to the user simultaneously, unambiguously and linearly. The downside is that its meaning has to be learned, which takes time and effort for explanations and training.

Visual explanation of the psychoacoustic sonification for three dimensions f(x, y, z). Users need no more than half an hour of explanation and demonstrations to understand the five sound attributes that describe a three-dimensional space. Most people can learn it; they have simply never tried and do not know how easy it is. From (Ziemer et al. 2020).

Psychoacoustic sonification of a trajectory in three-dimensional space. Without explanations and a learning phase, the sound is hard to interpret. However, trained users can imagine the three-dimensional path just from listening to the sound.

Application areas of complex, multi-dimensional psychoacoustic sonification include navigation in (image-)guided surgery and surgical training, as well as data monitoring in pulse oximetry.

Image-guided surgery and neuronavigation are established practices. Psychoacoustic sonification enables clinicians to carry out complicated interventions by ear. From (Ziemer et al. 2020).

Psychoacoustic sonification can inform anesthesiologists about the patient’s blood oxygen concentration with high detail. Further body data, like blood pressure, respiration rate, and temperature could be added. Details can be found in (Schwarz & Ziemer 2018).

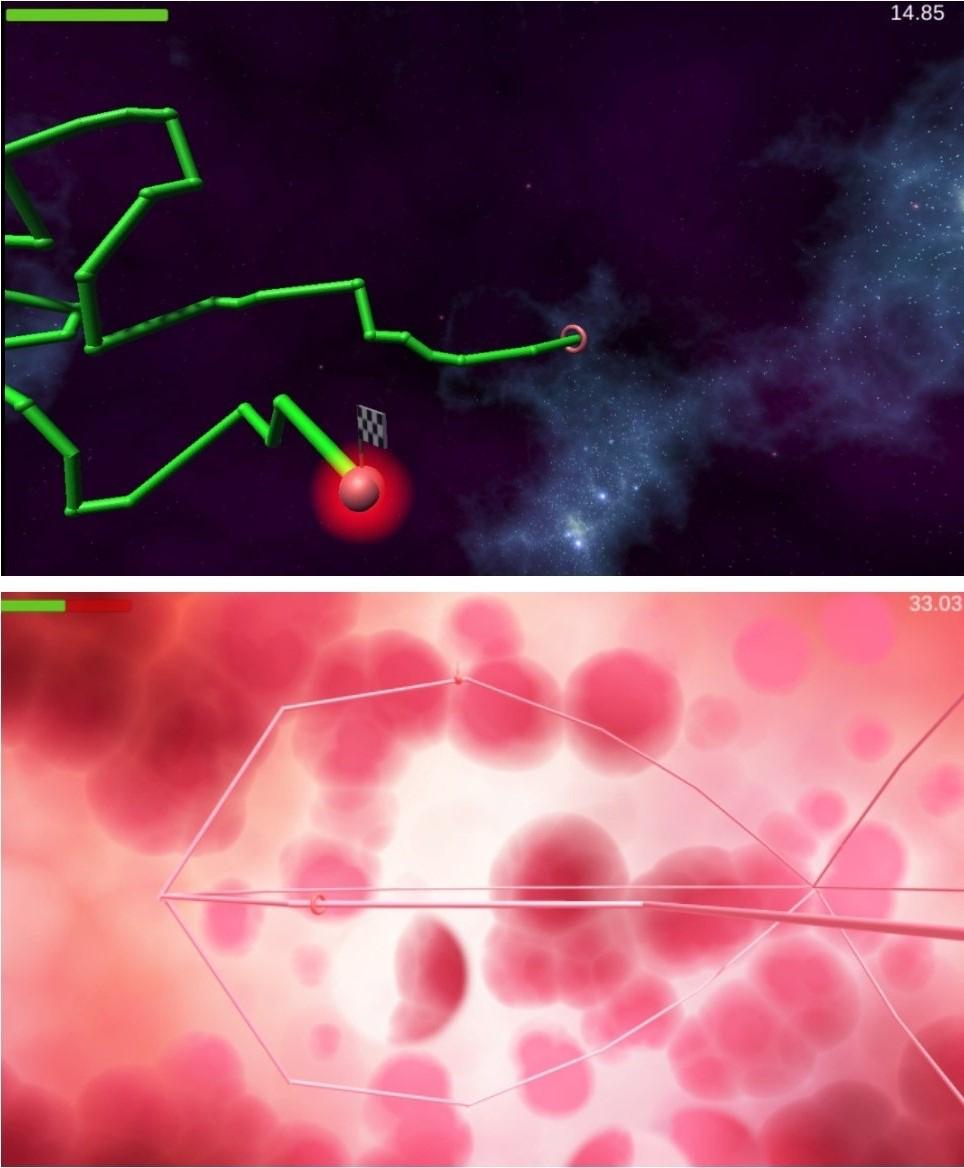

Further application areas of complex psychoacoustic sonification include audio games and flight simulators.

Psychoacoustic sonification can be integrated in computer games to complement visualizations, to enable blind gaming and to train your hearing abilities. From the CURAT-website.

Psychoacoustic sonification can be integrated in flight simulators and actual airplanes to help pilots carry out maneuvers. When equipped with some sensors, manned and unmanned vehicles, vessels, and aircraft can be controlled by means of sound.

More information on Psychoacoustic Sonification

Further information on psychoacoustic sonification can be found online:

- Support our research by playing our Sonification Game

- Use the psychoacoustic sonification with our audio spirit level: The Tiltification App

- News and publications on psychoacoustic sonification in medicine can be found on Researchgate.

- We tweet news from the Bremen Spatial Cognition Center on Twitter.

- More sound examples and demo videos can be found on YouTube.

- You can contact Tim Ziemer via e-mail and find his list of publications on Google Scholar.

- With a YouTube subscription you can watch the latest videos on psychoacoustic sonification.

Group Members:

The psychoacoustic sonification research group has three members

- Tim Ziemer (Principal Investigator, Bremen Spatial Cognition Center, University of Bremen)

- Holger Schultheis (Researcher, Bremen Spatial Cognition Center, University of Bremen)

- Steffen Kleinert, Kashish Sharma, Kilian Krüger and Saleem Ahmad (Student Assistants)

and many partners

- David Black (Fraunhofer MEVIS, Bremen)

- Tina Vajsbaher (Bremen Spatial Cognition Center, University of Bremen)

- Ron Kikinis (Medical Image Computing, University of Bremen, Fraunhofer MEVIS, Bremen, Brigham and Women’s Hospital and Harvard Medical School, Boston, MA, USA)

- the colleagues from the Project “Intra-Operative Information: What Surgeons Need, When They Need It”

- the students from the CURAT Bachelor’s and Master’s projects

- the students from the Sonification Apps Master’s project

- the research interns from the Mahidol University in Nakhon Pathom, Thailand

- and many colleagues from various research institutions and clinics.

Honors and Awards:

- Community Spotlight Article by the International Community on Auditory Display (ICAD)

- Associate Junior Fellow at the Hanse-Wissenschaftskolleg (Institute for Advanced Study)

- Invited Paper for the International Journal of Informatics Society (IJIS)

- Keynote Speech at the International Workshop on Informatics (IWIN2019), organized by the Informatics Society Japan

- Invited Talk at the Jahrestagung der Gesellschaft für Musikforschung (GfM2019)

- Hyundai Motors Best Paper Award at the International Conference on Auditory Display (ICAD2018)

- Best Poster Award at the Spatial Cognition 2018.

Publications on Psychoacoustic Sonification:

Journal Papers

Ziemer, Tim & Schultheis, Holger, “PAMPAS: A PsychoAcoustical Method for the Perceptual Analysis of multidimensional Sonification”, in: Frontiers in Neuroscience 16, 2022, doi: 10.3389/fnins.2022.930944.

Ziemer, Tim, Nuchprayoon, Nuttawut & Schultheis, Holger, “Psychoacoustic Sonification as User Interface for Human-Machine Interaction”, in: International Journal of Informatics Society 12(1), 2020, url: http://www.infsoc.org/journal/vol12/IJIS_12_1_003-016.pdf, doi: 10.13140/RG.2.2.14342.11848 (preprint). [Invited Paper]

Vajsbaher, Tina, Ziemer, Tim & Schultheis, Holger, “A multi-modal approach to cognitive training and assistance in minimally invasive surgery”, in: Cognitive Systems Research 64, pp. 57–72, 2020, doi: 10.1016/j.cogsys.2020.07.005.

Ziemer, Tim & Schultheis, Holger, “Psychoacoustic Auditory Display for Navigation. An Auditory Assistance System for Spatial Orientation Tasks”, in: Journal on Multi-Modal User Interfaces 13(3), pp. 205–218, 2019, doi: 10.1007/s12193-018-0282-2, (free view).

Ziemer, Tim, Schultheis, Holger, Black, David & Kikinis, Ron, “Psychoacoustical Interactive Sonification for Short-Range Navigation”, in: Acta Acustica United With Acustica 104(6), pp. 1075–1093, 2018, doi: 10.3813/AAA.919273.

Conference Papers

Ziemer, Tim, “Visualization vs. Sonification to Level a Table”, in: Interactive Sonification Workshop (ISon) 2022, Delmenhorst, September 2022.

Ziemer, Tim & Schultheis, Holger, “Both Rudimentary Visualization and Prototypical Sonification can Serve as a Benchmark to Evaluate New Sonification Designs”, in: Proceedings of the 16th International Audio Mostly Conference, St. Pölten, September 2022, doi: 10.1145/3561212.3561228.

Ziemer, Tim & Schultheis, Holger, “The CURAT Sonification Game: Gamification for Remote Sonification Evaluation”, in: 26th International Conference on Auditory Display (ICAD2021), Virtual Conference, Jun 2021, doi: 10.21785/icad2021.026.

The Sonification Apps Master's Project Team & Ziemer, Tim, “Tiltification – An Accessible App to Popularize Sonification”, in: 26th International Conference on Auditory Display (ICAD2021), Virtual Conference, Jun 2021, doi: 10.21785/icad2021.025.

Ziemer, Tim & Schultheis, Holger, “Psychoakustische Sonifikation zur Navigation in Bildgeführter Chirurgie”, in: Rebecca Grotjahn; Nina Jaeschke (Eds.): Freie Beiträge zur Jahrestagung der Gesellschaft für Musikforschung 2019 – 1, 2020, pp. 347–358, doi: 10.25366/2020.42.

Ziemer, Tim, Höring, Thomas, Meirose, Lukas & Schultheis, Holger, “Monophonic Sonification for Spatial Navigation”, International Workshop on Informatics (IWIN) 2019, Hamburg, Sep 2019, URL: http://www.infsoc.org/conference/iwin2019/download/IWIN2019-Proceedings.pdf#page=95. [Keynote Speech]

Ismailogullari, Abdullah & Ziemer, Tim, “Soundscape Clock: Soundscape Compositions That Display the Time of the Day”, in: 25th International Conference on Auditory Display (ICAD2019), Newcastle upon Tyne, Jun 2019, doi: 10.21785/icad2019.034.

Schwarz, Sebastian & Ziemer, Tim, “A Psychoacoustic Sound Design for Pulse Oximetry”, in: 25th International Conference on Auditory Display (ICAD2019), Newcastle upon Tyne, Jun 2019, doi: 10.21785/icad2019.024.

Ziemer, Tim & Schultheis, Holger, “Psychoacoustical Signal Processing for Three-Dimensional Sonification”, in: 25th International Conference on Auditory Display (ICAD2019), Newcastle upon Tyne, Jun 2019, doi: 10.21785/icad2019.018.

Ziemer, Tim & Schultheis, Holger, “A Psychoacoustic Auditory Display for Navigation”, in: 24th International Conference on Auditory Display (ICAD2018), Houghton (MI): Jun 2018, pp. 136–144, doi: 10.21785/icad2018.007. [Hyundai Motors Best Paper Award]

Ziemer, Tim & Schultheis, Holger, “Perceptual Auditory Display for Two-Dimensional Short-Range Navigation”, in: Fortschritte der Akustik – DAGA 2018, Munich 2018, pp. 1094–1096, URL: https://www.dega-akustik.de/publikationen/online-proceedings/.

Ziemer, Tim, Black, David & Schultheis, Holger, “Psychoacoustic Sonification Design for Navigation in Surgical Interventions”, in: Proceedings of Meetings on Acoustics (POMA) 30(1), 2017, Paper-Number: 050005, doi: 10.1121/2.0000557.

Conference and Journal Abstracts

Ziemer, Tim & Schultheis, Holger, “Linearity, orthogonality, and resolution of psychoacoustic sonification for multidimensional data”, J. Acoust. Soc. Am. 148(4), 2020, p. 2786, doi: 10.1121/1.5147752.

Ziemer, Tim & Schultheis, Holger, “Psychoakustische Sonifikation”, Jahrestagung der Gesellschaft für Musikforschung 2019, Detmold/Paderborn: Sep 2019, pp. 78–79, URL: https://www.muwi-detmold-paderborn.de/fileadmin/muwi/GfM/Programmheft_GfM2019_Web.pdf#page=80. [Invited Talk]

Ziemer, Tim & Schultheis, Holger, “Perceptual Auditory Display for Two-Dimensional Short-Range Navigation”, in: Programmheft der 44. Tagung für Akustik (DAGA), Munich: Mar 2018, p. 306, URL: http://2018.daga-tagung.de/fileadmin/2018.daga-tagung.de/programm/Feb_16_Programmheft.pdf.

Schultheis, Holger & Ziemer, Tim, “An auditory display for representing two-dimensional space”, in: Spatial Cognition 2018, Tuebingen, Sep 2018, https://www.researchgate.net/publication/340050716_An_auditory_display_for_representing_two-dimensional_space_Abstract. [Best Poster Award]

Black, David, Ziemer, Tim, Rieder, Christian, Hahn, Horst & Kikinis, Ron, “Auditory Display for Supporting Image-Guided Medical Instrument Navigation in Tunnel-like Scenarios”, in: Proceedings of the 3rd Conference on Image-Guided Interventions & Fokus Neuroradiologie (IGIC), Magdeburg: Nov 2017.

Ziemer, Tim & Black, David, “Psychoacoustic sonification for tracked medical instrument guidance”, in: The Journal of the Acoustical Society of America (JASA) 141(5), 2017, p. 3694, doi: 10.1121/1.4988051.

Apps

- Tiltification, available on the Google Play Store and the Apple App Store, as an APK and as an open-source Git repository, is a handy tool for science communication.

- The CURAT Sonification Game, available for Windows, macOS and Android, uses gamification for remote evaluation of psychoacoustic sonification.

Press Mentions

Tina Bauer: Nachgefragt: Was führt eine akustische Wasserwaage „vor Ohren”?, DUZ 12/21, Dez. 2021

Michael Derbort: Frei nach Gehör: Tiltification, Smartphone – Das große Handytest- und Kaufberatungsmagazin, Nov. 2021

Helmut Hackl: "Tiltification macht aus dem iPhone eine akustische Wasserwaage", pocket.at – lässige Produkte, leiwande Apps für iOS & Watch, Nov. 2021

Benedikt Bucher: Intelligente Werkzeug-App: Studenten machen es besser als Apple, CHIP Online, Oct. 2021

Andreas Wilkens: "Tiltification: Akustische Wasserwaage fürs Smartphone", Heise Online, Sep. 2021.

Patrick Klapetz: "Das Bild hängt schief: Nun kann man es auch hören", mdr Wissen, Sep. 2021

Benedikt Bucher: "Studenten entwickeln App und übertrumpfen sogar Apple: Darum ist diese Smartphone-App deutlich besser", CHIP Online, Sep. 2021

Ferdinand Pönisch: "Wasserwaage-App: So verwenden Sie Ihr Handy als Wasserwaage", FOCUS Online, Sep. 2021

Nadja Podbregar: "Handy-App als akustische Wasserwaage", scinexx – Das Wissensmagazin, Sep. 2021

SOA: "Anheben, bis es piept", Weser Kurier, Sep. 2021

Florian Fügemann: "'Tiltification' macht Handy zur Wasserwaage", Pressetext.com, Sep. 2021

Stefan Asche: "Mit der Wasserwaage hören, ob alles im Lot ist", vdi nachrichten, Sep. 2021

Market Research Telecast: "Tiltification: Acoustic spirit level for the smartphone", Market Research Telecast, Sep. 2021

iPhone Ticker: "Tiltification: App fungiert als akustische Wasserwaage", iphone-ticker.com, Sep. 2021

Rappelsnut: "Tiltification: Die akustische Wasserwaage", rappelsnut – Wandern, Punkrock und der ganze Rest, Sep. 2021

Heinz Schmitz: Tiltification macht Handy zur Wasserwaage, Heinz-Schmitz.org – Interessantes und aktuelles aus der IT-Szene, Sep. 2021.

Dr. Torsten Beyer: "Nützliche Android-Tools für den Chemiealltag", Analytik-News: Das Online-Labormagazin, Sep. 2021

Nicole Lücke: "Neue App: Handy bekommt ungeahnte Funktion und erweitert unsere Wahrnehmung", INGENIEUR.de, Sep. 2021

TeDo Verlag: "Hätten Sie gewusst…", Automation Newsletter 38 2021, Sep. 2021.

문광주 기자 (Reporter Kwang-Joo Moon): 기울기 측정하는 휴대폰 어플, The SCIENCE plus, Sep. 2021

HanseValley: "Studenten der Uni Bremen bringen akustische Wasserwaage aufs Handy", HanseValley – Der digitale Norden, Sep. 2021

Kai Uwe Bohn: "Let’s Hear if the Painting Hangs Crooked" ("So hört (!) man, ob das Bild schief hängt"), University of Bremen Press Release, Sep. 2021.

International Community on Auditory Display: "Community Spotlight: Tim Ziemer", on: icad.org, Mar. 2021.

PN Verlag: "Vom Gamer zum Lebensretter? Mit psychoakustischer Sonifikation die Chirurgie und das Patientenmonitoring unterstützen", in: KTM – Krankenhaus Technik + Management, Mar. 2021.

Arne Grävemeyer: "Operieren nach Gehör", c’t 21/20, Heise Medien, p. 58.

Kai Uwe Bohn, Anja Rademacher, Britta Plote: "Playing Video Games to Save Lives?" ("Mit 'Daddeln' Leben retten?"), on up2date, Nov. 2020.

RBO: "Akustischer Wegweiser", in: ARS MEDICI 20, 2020, p. 607.

Quintessence-Publishing: "Neue psychoakustische Trainingsmethode für Chirurgen?", on: www.quintessence-publishing.com, Sep. 2020.

ck/pm: "So helfen Töne beim Operieren", Zahnärztliche Mitteilungen, 2020.

Rico-Thore Kauert: "Mit Computerspielen besser operieren", on: StartupMag 2020.

Lieselotte Scheewe: "Durch Töne Wege finden", Bremen Zwei, Sep. 2020.

Frederik Grabbe: HWK in Delmenhorst will Nachwuchs fördern, Delmenhorster Kreisblatt, Sep. 2020

Kai Uwe Bohn: "Playing Games and Saving Lives by Doing So?" ("Spiele spielen – und dadurch später Leben retten?"), University of Bremen Press Release, Aug. 2020

Eddie Gandelman: Hearing Is The New Seeing, prioritydesigns 2019

The project is funded by the Central Research Development Fund (CRDF). I am not responsible for the content of links to external sources.